Robotic systems are becoming an increasingly important part of human life, yet today only a tiny fraction of their potential is exploited to automate monotonous, dangerous, and resource-intensive work, to raise public welfare, and to make people’s lives safer and more convenient. To perform complex tasks successfully in changing conditions, robots need two things: intelligence for adaptive decision-making and motor control, and the ability to perceive their environment accurately. The mission of EDI is to give robots these capabilities. For EDI scientists, this is a great opportunity to apply digital technology and years of accumulated knowledge and experience in image processing, signal processing, and artificial intelligence to the challenges of robotics and machine perception in several areas:

- Industry 4.0;

- agriculture;

- autonomous and cooperative driving.

One of our challenges is applying innovative digital technologies in next-generation industry, with the aim of increasing the quality and efficiency of European industrial production. In a rapidly changing production environment, a key prerequisite for this is robots that can adapt quickly to new production conditions and handle unpredictable situations, minimizing production downtime. EDI solutions in this field include placing cameras, depth sensors, and other sensors around and on an industrial robot arm and processing their signals with both established and novel techniques.
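Processing depth-sensor signals around a robot arm typically starts by turning each depth pixel into a 3D point the arm can reach. The sketch below assumes an ideal pinhole camera without lens distortion and a camera-to-robot transform already known from a prior calibration; the class and function names and the intrinsic values are hypothetical illustrations, not EDI's code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intrinsics:
    """Hypothetical pinhole-camera intrinsics, all in pixels."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point, x
    cy: float  # principal point, y


def deproject(u: float, v: float, depth_m: float, k: Intrinsics) -> tuple:
    """Back-project pixel (u, v) with metric depth into the camera frame.

    Assumes an undistorted pinhole model: x = (u - cx) * z / fx, and
    likewise for y.
    """
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


def to_robot_frame(point, rotation, translation) -> tuple:
    """Apply a rigid camera-to-base transform: p_base = R * p_cam + t.

    rotation is a 3x3 nested list and translation a 3-element sequence,
    both assumed to come from a hand-eye calibration done beforehand.
    """
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


k = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)  # made-up values
p_cam = deproject(380, 240, 2.0, k)
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
p_base = to_robot_frame(p_cam, identity, (0.0, 0.0, 0.5))
print(p_cam, p_base)
```

In a real cell the rotation would not be the identity; it is used here only so the result is easy to check by hand.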

One example of EDI's work is an adaptive robotic system that recognizes randomly placed objects in a box and sorts them, using multiple sensors, computer vision, and deep learning. Such an intelligence-enabled robotic arm can adapt to unexpected situations in today’s dynamic manufacturing environment.

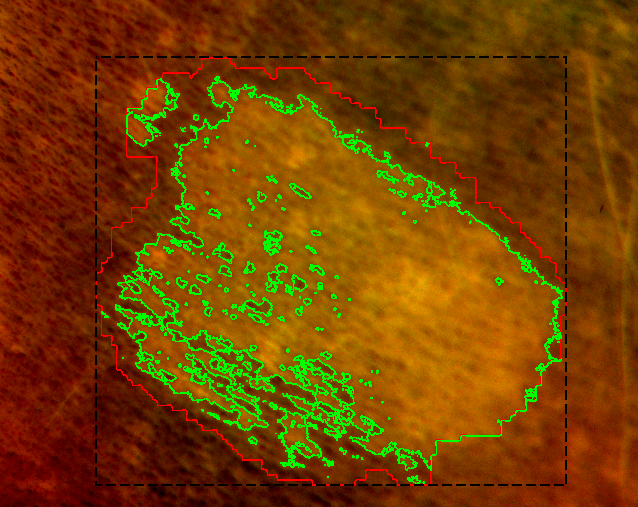

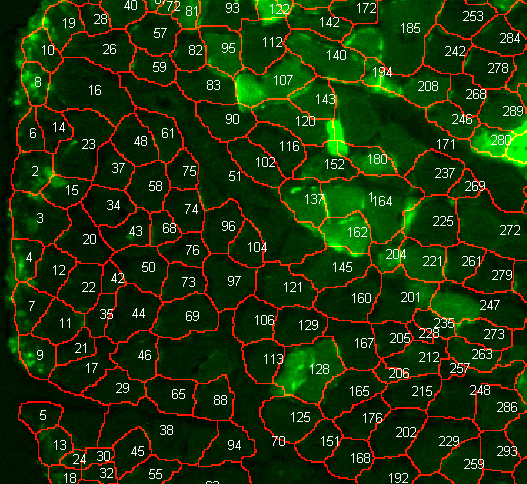

Another example is a weed control system for agriculture, which aims to remove weeds from fields tirelessly and efficiently, without using substances harmful to health and the environment. For this system, EDI developed a computer vision module that distinguishes crops from weeds and controls the robot's movement so that it covers the entire field.
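Covering an entire field is a coverage path planning problem. One common approach for an obstacle-free rectangular plot is a boustrophedon ("lawnmower") sweep. The sketch below assumes that field shape and a swath equal to the width the camera or weeding tool treats in one pass; the source does not say which coverage planner EDI uses, and `boustrophedon_path` is a hypothetical name.

```python
import math


def boustrophedon_path(width_m: float, length_m: float, swath_m: float):
    """Waypoints for a back-and-forth (boustrophedon) sweep of a rectangle.

    The robot drives along the field length in parallel lanes and reverses
    direction on each lane. Lanes are spread evenly so that their spacing
    never exceeds the swath width, which leaves no uncovered strips.
    """
    if width_m <= 0 or length_m <= 0 or swath_m <= 0:
        raise ValueError("dimensions and swath must be positive")
    lanes = math.ceil(width_m / swath_m)
    spacing = width_m / lanes  # <= swath_m by construction
    waypoints = []
    for i in range(lanes):
        x = (i + 0.5) * spacing  # lane centre line
        # even lanes drive "up" the field, odd lanes drive back "down"
        start, end = (0.0, length_m) if i % 2 == 0 else (length_m, 0.0)
        waypoints.append((x, start))
        waypoints.append((x, end))
    return waypoints


for wp in boustrophedon_path(width_m=3.0, length_m=10.0, swath_m=1.0):
    print(wp)
```

Spreading the lanes evenly, instead of packing them at exactly one swath apart, trades a little overlap for a guarantee that the last lane never falls outside the field.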

EDI is also working on machine perception solutions for autonomous driving, which has the potential both to increase road safety and to automate the transportation of goods and resources efficiently. Developing reliable perception systems is a major challenge, so the EDI self-driving car demonstrator uses multiple sensors (cameras, radars, lidars) and fail-aware/fail-operational artificial intelligence models, which we train to keep working even when some sensors are defective. A significant focus of our research is cooperative vehicles and systems, so embedded intelligence is augmented with communication protocols and cooperative decision-making.
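One widely used way to train models that keep working when a sensor fails is sensor dropout: during training, whole sensor inputs are randomly blanked so the network cannot rely on any single one. The sketch below assumes readings arrive as a dictionary keyed by sensor name and pairs the augmentation with a simple averaging late fusion; both functions are hypothetical illustrations, and the source does not state that EDI's fail-aware models are trained this way.

```python
import random


def sensor_dropout(frame: dict, p_drop: float, rng: random.Random,
                   keep_at_least: int = 1) -> dict:
    """Blank out whole sensors in one training sample.

    frame maps sensor name -> reading. Each sensor is dropped independently
    with probability p_drop and replaced by None, imitating a failed device.
    At least `keep_at_least` sensors are always kept.
    """
    names = sorted(frame)
    dropped = {n for n in names if rng.random() < p_drop}
    while len(names) - len(dropped) < keep_at_least and dropped:
        dropped.remove(rng.choice(sorted(dropped)))  # restore one sensor
    return {n: (None if n in dropped else frame[n]) for n in names}


def fuse_scores(scores: dict) -> float:
    """Late fusion: average per-sensor confidences, skipping missing sensors."""
    present = [s for s in scores.values() if s is not None]
    if not present:
        raise ValueError("no working sensors")
    return sum(present) / len(present)


rng = random.Random(0)
sample = {"camera": 0.8, "radar": 0.6, "lidar": 0.7}
augmented = sensor_dropout(sample, p_drop=0.3, rng=rng)
print(augmented, fuse_scores(augmented))
```

Here the "readings" are already per-sensor confidence scores to keep the example small; in practice the dropped inputs would be raw images or point clouds fed to a network.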

In all the areas mentioned above, EDI develops artificial intelligence techniques based on both classical signal processing and machine learning (deep learning with artificial neural networks, including convolutional and recurrent neural networks and generative adversarial networks). Advances in artificial intelligence are key tools in the digitalization process: they offer tremendous opportunities to automate a wide range of processes and economic potential for start-ups and new markets. EDI's many years of experience and accumulated knowledge in signal processing research and applications provide a solid foundation for continuing this tradition with artificial intelligence techniques.

EDI has extensive experience in computer vision: image classification, segmentation, and object detection. Beyond these core tasks, EDI has addressed satellite imagery segmentation, vehicle detection, skin lesion classification (normal/suspicious), and human identification by the blood vessels of the hand. In cooperation with companies, and at their request, we have also developed a car license plate recognition system, video game competition analysis software, and a silicon crystal production monitoring system for more accurate control of crystal growth.

A precondition for successfully using supervised machine learning is the availability of labeled training data. One of the most important fundamental problems in artificial intelligence is therefore unsupervised and few-shot learning, in which neural networks are trained without labeled examples or with only a few of them. Progress here would allow modern artificial intelligence techniques to be used in a much wider range of tasks, not only in data-rich applications. EDI researchers are also tackling this challenge; their developments include methods to accelerate data labeling and approaches to generating synthetic training data.
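Synthetic data eases the labeling bottleneck because labels come for free when the scene is generated. One common recipe is cut-and-paste compositing: object cut-outs are pasted at random positions onto background images, and their bounding boxes are recorded as they are placed. The sketch below works on plain 2D lists to stay self-contained; it is an assumed illustration of the general idea rather than EDI's generation method, and `paste_objects` is a hypothetical name.

```python
import random


def paste_objects(background, objects, rng):
    """Composite object cut-outs onto a background and emit box labels.

    background: 2D list of pixel values (rows of equal length).
    objects: list of (class_name, patch); patch is a 2D list in which
             None marks transparent pixels outside the object mask.
    Returns (image, labels); each label is (class_name, x0, y0, x1, y1)
    in pixels with x1, y1 exclusive. Objects pasted later may occlude
    earlier ones, loosely imitating a pile; boxes are not shrunk for that.
    """
    h, w = len(background), len(background[0])
    image = [row[:] for row in background]  # leave the input untouched
    labels = []
    for cls, patch in objects:
        ph, pw = len(patch), len(patch[0])
        if ph > h or pw > w:
            continue  # cut-out larger than the scene: skip it
        y0 = rng.randrange(h - ph + 1)
        x0 = rng.randrange(w - pw + 1)
        for dy in range(ph):
            for dx in range(pw):
                if patch[dy][dx] is not None:
                    image[y0 + dy][x0 + dx] = patch[dy][dx]
        labels.append((cls, x0, y0, x0 + pw, y0 + ph))
    return image, labels


scene = [[0] * 8 for _ in range(6)]
bolt = [[None, 5], [5, 5]]  # toy 2x2 cut-out with one transparent pixel
img, boxes = paste_objects(scene, [("bolt", bolt), ("bolt", bolt)], random.Random(0))
print(boxes)
```

A production pipeline would add blending, lighting changes, and occlusion-aware boxes or masks; the point here is only that every generated image arrives with its labels.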

In the context of fundamental artificial intelligence research, EDI is interested in:

- transferring models trained in virtual reality to real-world environments;

- imitation learning (robots learning tasks from human demonstrations);

- trustworthy and explainable AI;

- bio-inspired navigation systems.

An important line of EDI research is Embedded Intelligence, which processes sensor-generated information as close to its source as possible. Data processing and decision-making are shifted away from data centers and cloud computing to the border between the cloud and the physical world (edge/fog computing). Machine-perception algorithms embedded in specialized chips, such as FPGAs and SoCs, enable low-latency and energy-efficient solutions. By combining this approach with computationally efficient algorithms, we can extend autonomy to power-limited platforms such as small unmanned aerial vehicles.
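A common way to make neural-network inference fit FPGAs and SoCs is to quantize weights and activations to 8-bit integers, so each layer runs on cheap integer multiply-accumulate units and only one rescaling is needed per output. The text does not say which techniques EDI uses; the sketch below is a minimal, assumed illustration of symmetric per-tensor quantization, and all function names are hypothetical.

```python
def quantize_symmetric(values, bits=8):
    """Symmetric per-tensor quantization of floats to signed integers.

    A single scale maps the largest magnitude to the top integer code,
    e.g. 127 for 8 bits. Returns (codes, scale).
    """
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate floats from integer codes."""
    return [c * scale for c in codes]


def int_dot(qa, qb):
    """Integer-only multiply-accumulate, the core operation of a neural layer."""
    return sum(a * b for a, b in zip(qa, qb))


weights = [0.5, -0.25, 1.0]
inputs = [0.1, 0.2, -0.3]
qw, sw = quantize_symmetric(weights)
qx, sx = quantize_symmetric(inputs)
approx = int_dot(qw, qx) * sw * sx  # rescale the integer result once
exact = sum(w * x for w, x in zip(weights, inputs))
print(qw, qx, approx, exact)
```

Each quantized value is off by at most half a scale step, which is why the integer dot product stays close to the floating-point one while needing only integer arithmetic in the inner loop.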

Projects

- Artificial Intelligence for Digitizing Industry (AI4DI) #H2020

- Development of a robotic weed management equipment (RONIN) #ESIF

- Framework of key enabling technologies for safe and autonomous drones applications (COMP4DRONES) #H2020

- Integration of reliable technologies for protection against Covid-19 in healthcare and high-risk areas (COV-CLEAN) #SRP (VPP)

- Artificial intelligence for more precise diagnostics (AI4DIAG) #ESIF

- Automated hand washing quality control and hand washing quality evaluation system with real-time feedback (Handwash) #LCS (LZP)

- Programmable Systems for Intelligence in Automobiles (PRYSTINE) #H2020

- Vision, Identification, with Z-sensing Technology and key Applications (VIZTA) #H2020

- Research on the development of computer vision techniques for automating industrial processes (DIPA) #ESIF

- Advanced packaging for photonics, optics and electronics for low cost manufacturing in Europe (APPLAUSE) #H2020

- Digital Technologies, Advanced Robotics and increased Cyber-security for Agile Production in Future European Manufacturing Ecosystems (TRINITY) #H2020

- Innovative technologies for acquisition and processing of biomedical images (InBiT) #ESIF

- Cyber-physical systems, ontologies and biophotonics for safe&smart city and society (GUDPILS) #SRP (VPP)

- 1.25: Research on the development of a mathematical model for the silicon crystal growing technological process using image processing methods (SiKA) #Contract research

- Palm data acquisition and processing system (PALMs) #ESIF

- Multimodal biometric technology for safe and easy person authentication (BiTe) #ESIF

Publications

- Jānis Ārents, Ričards Cacurs, Modris Greitans, "Integration of Computervision and Artificial Intelligence Subsystems with Robot Operating System Based Motion Planning for Industrial Robots", Automatic Control and Computer Sciences Journal, Volume 52, Issue 5, 2018

- Novickis, R., Levinskis, A., Kadiķis, R., Feščenko, V., Ozols, K. (2020). Functional architecture for autonomous driving and its implementation. 17th Biennial Baltic Electronics Conference (BEC2020), Tallinn, Estonia.

- Justs, D., Novickis, R., Ozols, K., Greitāns, M. (2020). Bird's-eye view image acquisition from simulated scenes using geometric inverse perspective mapping. 17th Biennial Baltic Electronics Conference (BEC2020), Tallinn, Estonia.

- Buls, E., Kadikis, R., Cacurs, R., & Ārents, J. (2019, March). Generation of synthetic training data for object detection in piles. In Eleventh International Conference on Machine Vision (ICMV 2018) (Vol. 11041, p. 110411Z)

- Martin Dendaluce Jahnke, Francesco Cosco, Rihards Novickis, Joshué Pérez Rastelli, Vicente Gomez-Garay "Efficient Neural Network Implementations on Parallel Embedded Platforms applied to Real-Time Torque-Vectoring Optimization using Predictions for Multi-Motor Electric Vehicles", Electronics (ISSN 2079-9292)

- N. Dorbe, R. Kadikis, K. Nesenbergs. “Vehicle type and licence plate localisation and segmentation using FCN and LSTM”, Proceedings of New Challenges of Economic and Business Development 2017, Riga, Latvia, May 18-20, 2017, pp. 143-151

- Kadikis, R. (2018, April). Recurrent neural network based virtual detection line. In Tenth International Conference on Machine Vision (ICMV 2017) (Vol. 10696, p. 106961V). International Society for Optics and Photonics

- Baums, A., Gordyusins, A., 2015. An evaluation the motion of a reconfigurable mobile robot over a rough terrain. Automatic Control and Computer Sciences, Allerton Press, Inc., vol. 49, no. 5, pp. 39-45

- Physical model for solving problems of cost-effective mobile robot development

- Harijs Grinbergs, Artis Mednis, and Modris Greitans. Real-time object tracking in 3D space using mobile platform with passive stereo vision system. N. Tagoug (Ed.): Proceedings of World Congress on Multimedia and Computer Science (WCMCS 2013), pp. 60-68, 2013. Association of Computer Electronics and Electrical Engineers, 2013.

- J. Judvaitis, I. Homjakovs, R. Cacurs, K. Nesenbergs, A. Hermanis, K. Sudars, 2015. Improving object transparent vision using enhanced image selection, Automatic Control and Computer Sciences, Vol. 49, Nr. 6, 2015, pp

- Kadikis, R. (2015, April). Registration method for multispectral skin images. In 2015 25th International Conference Radioelektronika (RADIOELEKTRONIKA) (pp. 232-235). IEEE.

- Kadiķis, R., & Freivalds, K. (2013, December). Vehicle classification in video using virtual detection lines. In Sixth International Conference on Machine Vision (ICMV 2013) (Vol. 9067, p. 90670Y). International Society for Optics and Photonics.

Patents

- Patent No. EP 2700054 B1 “System and method for video-based vehicle detection”

- Patent No. EP 2695104 B1 “Biometric authentication apparatus and biometric authentication method”

- LR patent No. 14998 “The device for skin melanoma distinguishing from benign naevus”

- LR patent No. 13942 “Analyzer of digital grayscale images”

- LR patent No. 13857 “Analyzer of digital X-ray images for detecting foreign bodies in objects in real time”